- AI writing tools and AI imaging tools such as Midjourney are rapidly changing the world of work and study.

- Deakin University discovered bots are being used in 20% of assessments.

- However, because AI writing tools are trained to provide answers that feel right to humans, ChatGPT’s answers can trick people into believing the output is correct.

You have probably read about or used the long-form question-answering artificial intelligence (AI) called ChatGPT. It’s everywhere at the moment and seems to be capturing the public imagination with its human-like (only faster) responses to the questions asked by millions of users.

The idea of this technology is that it learns what its users want. It is revolutionary.

Microsoft, one of the initial investors in OpenAI, the parent company of ChatGPT, is looking at integrating it with Microsoft Windows and Skype. Presumably you won’t have to type that letter any more; you’ll ask ChatGPT and your Windows document will appear as if by magic.

The implications of any new technology are not always clear at the outset. However, AI writing tools and AI imaging tools such as Midjourney are rapidly changing the world of work and study.

Image created on Midjourney by IFIS Publishing

Lecturers and universities on red alert

We have already seen universities start to change the way they are assessing students to take into account the use of ChatGPT. One academic at Deakin University has already discovered bots being used in 20% of assessments.

Academics and course leaders have already begun to change coursework assignments to take into account the use of software like ChatGPT.

Ideas to thwart ChatGPT include requiring students to write rough drafts in class, where internet access can be controlled, and to explain and justify any subsequent revisions.

Student essays may be relatively easy to control. But what about research?

Will the majority of students continue to do the research themselves, delve deeply into scientific literature and synthesise and present their own ideas? The answer to this is most probably “yes”.

There have always been ways to “cheat”, and many students avail themselves of essay writing services, whether found online or offered by other students, paying a pretty price to get the work done. ChatGPT may make cheating easier and cheaper, and that is going to exacerbate the problem.

Could ChatGPT help students do better?

It’s possible that academics could change assessments and even allow the use of AI tools to do repetitive and routine tasks, allowing more time for high-level thinking.

It may be that ChatGPT can be helpful in the same way a scientific calculator has become an essential tool, for example by generating ideas for related concepts, terms, and words about a particular topic. A blog post at Duke University makes this point well.

Our highly popular Literature Searching Best Practice Guide now includes a new chapter on how to effectively and ethically use AI tools for academic research and writing. Take a look!

It’s advisable not to use ChatGPT to summarise academic papers. This could lead to a temptation to cite things without actually having read them, or to try to use the information stripped of its context. Both of these are poor practices that members of the IFIS Faculty Advisory Board said, even before ChatGPT was developed, that students and researchers should be aware of and avoid.

What happens if people use the technology to create academic research papers?

In a world where so much of the content on the internet is fake or, in the case of published scientific works, predatory, it does not take a giant leap to realise that research papers and abstracts can now be written quickly and at zero cost.

In a recent test, ChatGPT wrote abstracts that plainly fooled experienced scientists, even though they had been warned that some were written by the AI.

The next step will surely be research papers authored by ChatGPT with full abstracts, uploaded to predatory journals. Maybe this will happen with full acknowledgement of authorship by ChatGPT. Maybe not.

It is entirely possible to create your scientific status based on fake, AI-generated research using predatory journals. Has it been done? Who knows?

The question of whether AI tools like ChatGPT can be named as an author of a research paper has already prompted a new policy, announced on the arXiv blog. They have clearly stated that a computer program cannot take responsibility for the contents of a paper. Nor can it agree to arXiv’s terms and conditions. It remains to be seen how journals and other forms of publishing react.

There are limitations on what ChatGPT can currently do

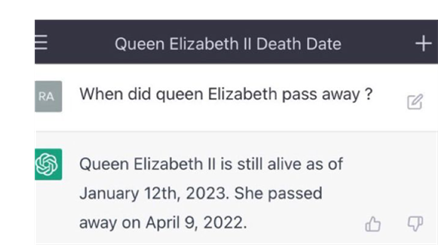

At the moment, ChatGPT cannot access information after 2021. It does not have access to all the information on the internet or behind paywalls.

When you ask the AI whether it can access academic journals, it has this to say for itself:

“I am not able to access academic journals directly. However, I can provide information that may be found in academic journals if it is within my training data. Additionally, I can provide summaries of papers and articles.”

The conversational nature of the software naturally leads you to ask another question. We asked ChatGPT…

Is it possible you use incorrect information that is to be found in your training data?

Yes, it is possible for a language model like me to use incorrect information that is present in my training data. Because my training data is sourced from the internet, it may contain inaccuracies, biases, or outdated information. Additionally, I am a machine learning model and can make mistakes in my understanding of text.

ChatGPT answers are not always correct

Since it is trained to provide answers that feel right to humans, ChatGPT’s answers can trick people into believing the output is correct.

And whilst simple errors are obvious (most people realise that Queen Elizabeth II is dead and that a ChatGPT answer saying otherwise is plainly wrong), there may be errors in more nuanced answers or in replies on complicated subjects.

This makes relying on a friendly chat to make decisions on what research to do, and how to present it, very unwise.

It is tempting to take shortcuts. But ChatGPT results could mean you get entirely the wrong information. The consequence is that you start your journey going in the wrong direction.

Another factor when looking at using ChatGPT is that the quality of the output depends entirely on the quality of the input. In other words, expert directions (prompts) generate much better answers. And how do you ask the right questions?

By having developed your research skills in the first place.

Critical research skills are one of the important things to teach students and experienced food scientists alike

It is why we spend a lot of time at IFIS Publishing on providing resources in the science of food and health to assist students, researchers and faculty. Our role is to help people find the right information that has been verified by our experts. This is what truly saves time, effort and money.

There may be a time when AI can guarantee results are error-free and relevant. It may even be possible to obtain data from journals, conference proceedings and books that are paid-for subscription products.

The more likely scenario for researchers and students is that AI will become a widely used and integrated tool, very much like scientific calculators, laptops and databases.

And it is up to everyone concerned with quality science to ensure that this tool complements, rather than replaces, human intelligence.

Or in the words of ChatGPT…

“A specialist database that has been verified by humans is more likely to contain accurate and trustworthy information compared to databases that rely solely on automated processes.

Human verification ensures that the information in the database is up-to-date, relevant and unbiased, which makes it more useful for research and academic purposes.”

The machine has spoken.